Looking back on 2024 can help plan for the biggest 3D art trends we’ll see in 2025. It’s been an incredible year in 3D art, game art and film VFX, and there are no signs of progress slowing as we enter 2025. The best laptops for 3D modelling are driving AI forward, with productivity, design, and generative AI being the main focus of the world’s foremost manufacturers. Apple’s M4 Max laptop continues to push standards forward with a particular focus on 3D artists.

Nvidia also continues to drive AI GPU development forward as it pivots its leading-edge gaming GPUs towards creatives and 3D artists. What began in 2024 will continue into 2025. More powerful hardware will make it possible for 3D visualisers to generate more complex and realistic results of a kind previously limited to large film and animation studios. The day of the indie artist, game dev or filmmaker competing with larger studios is becoming more of a reality.

The best 3D modelling software is latching onto hardware and now delivers features that streamline creative workflows. In some areas, this will lead to a reduction in creativity and freedom, whereas other industries will be able to achieve more in less time.

My roundup of the trends we expect to see in 2025 includes changes to the way we create as well as how we interact with content. 3D artists will have power in their hands like they’ve never had before and consumers will engage with it through their phones, VR headsets and various other wearables. For some more predictive fun, read our must-know digital art trends for 2025 and our game design trends for 2025.

1. Unreal Engine 5 will continue to impress

We’ve written extensively about everything you need to know about Unreal Engine 5 as well as how a stunning Unreal Engine 5 tech demo generated intense debate recently. Unreal Engine 5 will continue to cement itself as the go-to option for game developers, but filmmakers, visualisers and animators will adopt Epic Games’ engine like never before in 2025.

We will see a continued shift away from traditional packages as artists embrace real-time alternatives that are significantly more versatile and adaptable. The recent game announcements at The Game Awards showed just how embedded UE5 is in game development, but as we discovered this year, from Amazon Prime’s Fallout to animated series Max Beyond, more filmmakers are turning to Unreal Engine 5, and that number is going to grow in 2025.

Likewise, more live events are making use of real-time tools, such as Frameless, and that too will continue next year, with the recently announced Interstellar Arc, an interactive Las Vegas experience to rival the Las Vegas Sphere.

One thing to note: there are rivals looking to eat into UE5’s dominance. The newly revealed open-source Dagor Engine impresses, and the developer Gaijin Entertainment believes its engine betters UE5 for realism, at least in the narrow use of first-person shooter games. There’s also Unity 6, just launched and clawing back its users after some poor pricing decisions. And finally, PUBG creator PlayerUnknown has a new game engine that lets you create ‘an Earth-scale world generated in real time’ – called Preface: Undiscovered World, it’s free to try on Steam.

2. Generative AI will become more usable

The pros and cons of generative AI are well documented. Our own list of cons includes the fact that results can be frustrating, subscriptions are expensive, training data is full of bias, and the results are inconsistent. These are serious problems that will increasingly be eased out of generative AI software in 2025.

We will also see more AI tools that are ethically founded so that artists’ intellectual property is not taken advantage of or misused – Adobe is continuing its push for AI credential tags and fairness in copyright use, with new tools like Project Know How being used to track image uses and ownership. Image and video generators will also become more predictable and results easier to customise.

Importantly, 2025 should see the moment the AI bubble bursts and creator-first AI tools become more dominant, as the ‘prompt jockey’ era comes to an end and artists – particularly 3D creatives and animators working in VFX and game development – see real-world use cases for AI overtake simple image generation.

3. Virtual production grows

LED volume stages have begun to completely revolutionise filmmaking, with greenscreens becoming almost redundant overnight. If it wasn’t for the cost, these LED walls would be on every single film set in 2025.

Unreal Engine and Unity are making it possible to create virtual sets like never before and we should begin to see filmmakers using AI art tools like Adobe Firefly or other AI platforms to quickly generate scenes live on set. As AI tools become more controllable, with output ring-fenced by style guides set by art directors, this could become more common.

This would seriously increase creative freedom during filming, if the AI results can really be tailored – it’s something Adobe, for example, is focusing all of its efforts on with platforms like Project Concept AI.

4. Digital humans in real time

Epic Games’ MetaHuman was released in 2021 but it’s stayed fairly stagnant in its features ever since. This has left room for the likes of Reblika Software to launch an application called Reblium, which enables users to quickly create and customise a diverse range of digital human characters and easily import them into Unreal Engine.

I expect that digital humans will become quicker and easier to generate, with the possibility of them being created in real-time. This could transform the way characters are created in games or films.

MetaHuman has been developed considerably in the last two years, likely from increased demand and competition. But new procedural and AI tools are also being added to existing 3D software to enable these character models to be easily animated. All the best animation software will continue to add and promote procedural tools to make life easier, from automatically rigging models to referencing from video.

5. Large worlds will be easier to create

We’ve begun to see companies like Cybever develop tools that utilise generative AI to create vast worlds in 3D. It is already possible to generate 3D models from simple sketches, but 2025 will likely see an increase in both quality and speed.

Tools will increase in features, putting an almost unbelievable level of control into the hands of artists. There will also be a firm commitment to the resulting models being game-engine ready with the ability to export multiple levels of detail and textures.

It won’t happen in 2025, but you’re also going to hear more noise around AI platforms replacing game engines as an innovative and cheap way to generate detailed open worlds for gaming.

We’ve already seen how Tencent’s GameGen-O looks like the first step towards an AI game engine that can create open worlds from prompts. Google has unveiled a world model called Genie 2. Both aim to generate worlds with object interactions, complex character animation, real-time physics and NPC AI.

Both are blunt tools that look to create worlds but are unable to let developers craft stories, build detail and finesse pacing or game design ideas. That’s why 2025 will be noisy for gen AI world builders, but they are still some way off real-world use.

6. Markerless motion capture will be the new norm

We’ve become accustomed to filmmakers requiring fancy tracking objects and markers on actors. These will be a thing of the past because, after years of research and development, Move has completely disrupted the industry.

In 2024, Move launched Move One single-camera motion capture, Move Pro multi-camera motion capture, and Move Live real-time motion capture and post-processing. These represent the beginning of a new wave of tools that will make it easier than ever to generate motion capture data from any source.

Move is not the only studio pushing this tech. Established mocap brands like Vicon and Radical also have markerless motion capture, so expect this tech to be refined and become more commonly used in 2025.

7. VR/AR will become increasingly commonplace

The Apple Vision Pro was released at the beginning of 2024 but most people felt that wearing one made you look pretty goofy. This doesn’t seem to have slowed the hype around new ways of engaging with reality, though. Developments have been further encouraged by Adobe Firefly and Lightroom coming to Apple’s mixed reality headset.

With Nvidia pushing AI through their cards, we’ll see more use-cases, AI-driven experiences, and a greater number of mixed reality apps. Very few of these will be the finished article but will rather demonstrate what could be possible in the future.

Apple Vision Pro hasn’t sold in great numbers, perhaps due to its steep price tag in the face of Meta’s affordable and nicely specced Quest 3, and Sony’s PSVR 2 has suffered from a lack of games. But as we noted during Black Friday, when the tech comes down in price people snap it up. Expect some price drops in 2025 on the best VR headsets as well as a new generation of creative apps, like Shapelab 2025, the innovative 3D sculpting tool for PC VR.

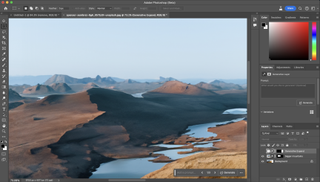

8. Adobe will invest further in 3D tools

With Substance tools leading the charge, Adobe is catering for 3D artists like never before – its 3D Viewer for Photoshop is unbelievably powerful. Adobe is also working closely with Maxon – creator of Cinema 4D and ZBrush – to create its 3D apps and plugins.

Adobe has announced over 100 new features that streamline and enhance workflows. Features including text-to-3D AI, developments in Substance 3D Viewer, as well as Project Neo – one of the best web browser 3D modelling apps – will continue to drive the 3D industry forward.

Another tool that will likely gain traction is Project Scenic, launched as a part of Adobe MAX’s Sneaks 2024, which makes 2D generative AI image creation easier by enabling artists to build 3D scenes using copilot prompts.

Alexandru Costin, Vice President of Generative AI and Sensei at Adobe, told us in an exclusive interview: “AI has the potential to democratise creativity, putting powerful tools in the hands of everyone, from professionals to small business owners, the world’s largest enterprises and students.”

He adds: “It is equally important that we also consider how we innovate. Responsible innovation is our top priority whenever we launch a new AI feature.”

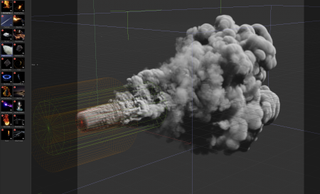

9. Improvements in VFX simulation

Simulation tools will continue to go from strength to strength thanks to AI, new procedural developments and more advanced computational models. This will enable artists to generate simulated water, smoke, and fire like never before.

It’ll also be possible to create advanced and complex results thanks to more advanced processors in desktop PCs and laptops, which can handle complicated calculations – and if, as expected, Nvidia launches its new GPU and continues to develop its Studio tools, we can all benefit.

These improved simulations will also be more accessible in real-time engines, including Unreal Engine and Unity, as plugins like ZibraVDB for Unreal Engine enable film-quality VFX and simulation to be brought into game engines.

10. Low-poly art is here (and we love it)

Adobe’s new Project Neo web app will likely come out of beta in 2025, perhaps in time for Adobe MAX, and will continue to enable artists to create simple 3D models, which can then be easily sent to Adobe Illustrator, where again they’re turned into vector files to compile into a scene.

These developments will further unite the worlds of 3D and 2D, with an increasing number of artists opting to create low-poly art. Other similar 3D modelling apps are available, such as Womp – an app our writer tested for her 3D printing projects – that are proving popular with 2D artists trying 3D modelling for the first time. As a result, we’ll see a rise in popularity of this kind of stylised, low-poly modelling, likely emerging across social media like TikTok.

11. Mobile 3D modelling will grow in popularity

With Shapelab Lite being released for Quest 3, we’re beginning to see the possibility of more complex 3D modelling on smaller and less powerful mobile devices. The application brings the essential features of the Shapelab PC VR app to standalone VR at a more accessible price.

The release of ZBrush for iPad also marks a stake in the ground, showing Maxon’s commitment to making its modelling tools accessible on mobile. I expect we’ll see Maxon develop this app even further in 2025.

While there’s no official news, I would love to see Procreate introduce a 3D app in 2025, bringing its brilliant approach to digital art creation and intuitive design to 3D modelling. Procreate already supports 3D painting for existing models, but could the dev really push into full 3D sculpting? I’d love to see it, even for stylised low-poly modelling in the vein of Womp.