Blumhouse Productions is known for making terrifying movies on a tight budget. Now the horror movie studio behind the likes of Paranormal Activity, Five Nights at Freddy’s and BlacKkKlansman is working with Meta to test Meta Movie Gen, the AI video generator that the owner of Facebook and Instagram revealed earlier this month. And that could be a scary combination.

Select creatives are taking part in the pilot, including Casey Affleck, director of I’m Still Here and Light of My Life, Aneesh Chaganty, and the Spurlock Sisters, who are part of Blumhouse’s first annual Screamwriting Fellowship. Aneesh has already made a video with Meta’s AI tool, in which he says he was against AI until he realised that his ten-year-old self would have loved it for his homemade movies.

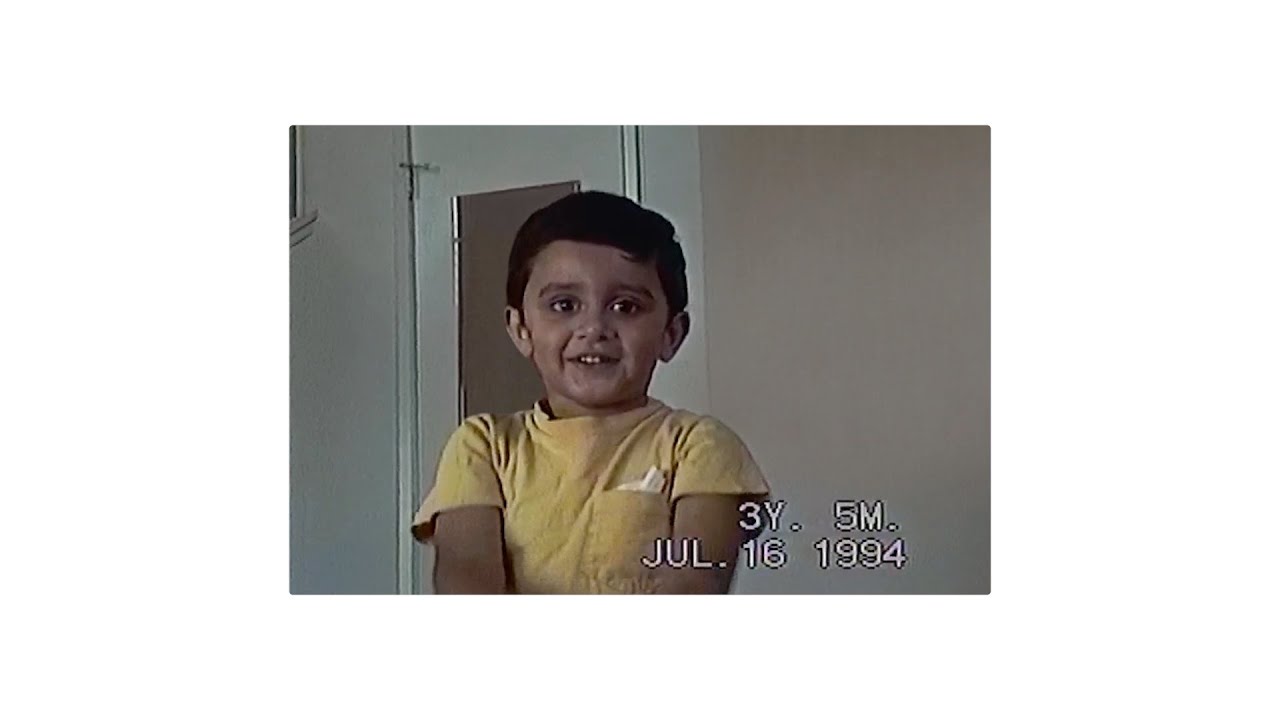

For the video above, Aneesh used Meta Movie Gen to change the backdrops of, or add special effects to, short movies he made as a kid. “I hate AI. But with a tool like this? I dunno… maybe I’d have just dreamed a little bigger,” he says, noting that what he likes about the tool is that “little Aneesh would still have had to make the movie.”

It’s an emotive and clever plug for the tech that highlights its potential for human-led storytelling while also excusing the current limited quality of the AI output. I mean, sure, it is good enough for a kid’s home movie. I would have been thrilled to have had access to this tech when I was a kid trying to produce Doctor Who episodes in the lunch break at primary school.

Blumhouse founder and CEO Jason Blum strikes a similar tone in a statement included in Meta’s announcement. “Artists are, and forever will be, the lifeblood of our industry,” he says. “Innovation and tools that can help those artists better tell their stories is something we are always keen to explore, and we welcomed the chance for some of them to test this cutting-edge technology and give their notes on its pros and cons while it’s still in development.”

He adds: “These are going to be powerful tools for directors, and it’s important to engage the creative industry in their development to make sure they’re best suited for the job.”

As for Meta, it says Movie Gen will allow creatives to express ideas more quickly and “explore visual direction, tone and mood.” “We heard that filmmakers see potential for Movie Gen as a collaborator and thought partner, with its unexpected response to text prompts inspiring new ideas,” it says.

It also seems that public access to Movie Gen might arrive sooner than we thought. Meta initially said it had no plans to open access “anytime soon”. But CEO Mark Zuckerberg has since posted on Instagram to say Movie Gen would be coming to the platform next year, making the announcement via a video of himself doing leg presses in different historical eras.

Connor Hayes, VP of gen AI, has confirmed that timeframe. “While we’re not planning to incorporate Movie Gen models into any public products until next year, Meta feels it’s important to have an open and early dialogue with the creative community about how it can be the most useful tool for creativity and ensure its responsible use,” he said.

Meta’s collaboration with Blumhouse is another example of how studios are starting to take AI video generation seriously. It comes shortly after Runway entered into a deal to create a bespoke AI model for the film and TV producer Lionsgate.

Meta says Movie Gen can currently create videos of up to 16 seconds in different aspect ratios, as well as audio of up to 45 seconds, and that it compares well against Runway, OpenAI’s Sora and Kling. If it is released publicly, Meta’s AI video generator is also likely to compete with Adobe Firefly Video, which already powers the new Generative Extend feature in Premiere Pro.

It’s not clear what data the model was trained on, however. Meta has said that Movie Gen was trained on “a combination of licensed and publicly available datasets” but hasn’t specified which. Meta has access to vast amounts of video data through products such as Instagram and Facebook.