AI art is mired in controversy, so it should be no surprise that Meta’s confirmation that it trains its AI image generator using public Instagram images has upset a lot of people.

The revelation could be the straw that broke the camel’s back for many users of the social media platform. Already frustrated by the decrease in reach that their posts receive these days on a platform increasingly saturated with paid ads, many users, and particularly artists, are wondering if there’s any point to Instagram anymore. It might be time to consider an alternative from among the best social media platforms for artists and designers.

It’s something that we always suspected, but chief product officer Chris Cox confirmed at the Bloomberg Tech Summit that Meta is using Instagram images to train its AI image generator, highlighting this as the key to the next-generation model’s “amazing quality images”. He noted the broad range of the dataset in Instagram, covering everything from art and fashion to personal family photos and travel snaps.

Creators are angry, saying that they were unaware of the use and that Meta did not secure consent. Many artists have since been publishing posts and stories on Instagram to declare ownership of the copyright of their work and to say that they do not consent to its use to train AI.

Such posts are likely to have little impact on Meta’s stance if the small print in Instagram’s terms and conditions allows Meta to do what it’s doing. Potentially more impactful could be many artists’ decisions to delete their content and leave the platform (some are suggesting the Cara app as an alternative). However, many others say they fear they don’t have the energy for yet another social media platform.

A post shared by PocahStudios (@pocahstudio)

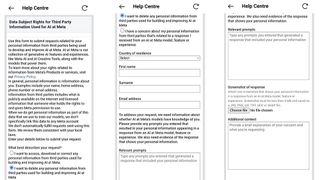

Is there anything else we can do? There is for some people, although Meta’s making it as difficult as possible. Because of EU laws, users in the European Union do have a chance to opt out. Alas, there’s no easy ‘opt out’ button. That would be far too transparent and ethical. Instead, Meta has buried away an option to “request” an opt out. And even if you find it, the process seems designed to put people off using it.

To try to opt out from Meta’s use of Instagram data to train AI, you can go to Settings > Help > Help Center > About AI on Instagram > Learn about How Meta uses information for generative AI models and features. There, among reams of text, one confusing paragraph reads: “To help bring these experiences to you, we’ll now rely on the legal basis called legitimate interests for using your information to develop and improve AI at Meta. This means you have the right to object to how your information is used for these purposes. If your objection is honored, it will be applied going forward.”

Under EU law, Meta has to allow an opt out, but the phrasing here seems intended to suggest that the decision is entirely up to Meta and that there’s a slim chance of it happening. If that doesn’t put you off and you decide to click next, you’re hit with a form that asks for an explanation of your request, including evidence of your personal information appearing in Meta’s AI output. You then have to jump through authentication hoops to send it.

Users of Reddit who have completed the form report receiving the following message: “Thank you for contacting us. We don’t automatically fulfill requests and we review them consistent with your local laws.” In short, it’s a nontransparent, unethical abomination that shows an extraordinary lack of any kind of respect for users. Meta insists that its use of user content is legal.

On using user data to train AI models, “We don’t train on private stuff, we don’t train on stuff people share with their friends. We do train on things that are public,” @Meta Chief Product Officer Chris Cox #BloombergTech pic.twitter.com/FC0SWlTgqY (May 9, 2024)

Meta says that it only uses public posts, so another way to avoid helping to train its AI is to make your account private, but that’s hardly an option for those wanting to use the platform to promote their work. There’s also the option of using anti-AI tools, which apply imperceptible distortions to images to make them unusable for AI training or, in the case of Nightshade, even potentially corrupt the AI training data.

If you want out and aren’t sure how to go about it, see our pieces on how to delete an Instagram account and how to download Instagram photos.